The Netflix Algorithm That Knows You Better Than You Know Yourself

You’re thinking about watching something light and funny, but not too silly. Before you even search, Netflix serves up the perfect show. Coincidence? Or is the recommendation engine reading your mind, or just your behavior?

Last Tuesday at 11:47 PM, you opened Netflix. You weren’t sure what you wanted—maybe something light, maybe nostalgic, definitely not too intense. Within seconds, “The Good Place” appeared. You’d never searched for it, never added it to your list. But it was exactly right.

You clicked play without thinking.

Here’s what makes that moment remarkable: Netflix didn’t get lucky. They didn’t guess. And they definitely didn’t read your mind. What they did was somehow more fascinating—and it happened because their engineers did something that sounds completely insane.

They treated you like a sentence.

The Problem: When Good Systems Become Costly

By 2024, Netflix had built something impressive: dozens of specialized recommendation models, each optimized for a specific task. One model predicted what you’d watch next. Another figured out which thumbnail you’d click. Another understood whether you were in “Continue Watching” mode or “Discovery” mode. Each model was good at its job. Each one had been carefully trained.

But the system had a hidden cost.

Every time they wanted to improve recommendations, they had to update multiple models. Every innovation in one model stayed trapped there — it was difficult to transfer improvements to others, even though they all used the same underlying data. The maintenance burden was growing. The complexity was increasing. Netflix’s engineers described it as “quite costly” to maintain and scale.

They needed a new architecture. One where learning about user preferences could be centralized, where innovations could spread across the entire system, where the machine could learn from itself.

In March 2025, three Netflix engineers, Ko-Jen Hsiao, Yesu Feng, and Sudarshan Lamkhede, published a post on Netflix’s tech blog that deserves attention.

They described replacing that sprawl with a different design: a single Foundation Model that learns member preferences centrally and passes what it learns on to the other models, treating human behavior much the way ChatGPT treats language.

Here’s the insight that unlocked everything: your viewing behavior isn’t random. It has patterns, structure, grammar. Just like words in a sentence follow rules, your clicks, pauses, and choices follow patterns. You’re not a random number generator. You’re a story being written in real-time, and Netflix taught an AI to read that story.

They call it “interaction tokenization”.

How Netflix Turned Your Tuesday Night Into Tokens

Think about how ChatGPT works. When you type “The quick brown fox,” it doesn’t see those words. It sees tokens: small units of text, whole words or word fragments, that can be combined and recombined. The model has learned, from billions of examples, how tokens flow together. It knows what usually comes after “The quick brown” because it has seen the patterns.

Netflix did the exact same thing with your behavior.

When you opened the app last Tuesday at 11:47 PM, Netflix didn’t just see a user logging in. They saw a sequence of tokens:

Token 1: “User opens app at 11:47 PM on Tuesday”

Token 2: “Browses comedy section for 23 seconds”

Token 3: “Hovers over ‘The Good Place’ thumbnail for 1.8 seconds”

Token 4: “Clicks play”
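To make the idea concrete, here is a minimal sketch of how raw events like the four above could be turned into discrete tokens. The `Event` fields, the time and duration buckets, and the `action|day|time|duration` token format are hypothetical choices for illustration, not Netflix’s published schema.

```python
from dataclasses import dataclass

@dataclass
class Event:
    """One raw interaction (hypothetical schema, for illustration only)."""
    action: str     # e.g. "open_app", "browse", "hover", "play"
    weekday: str    # e.g. "Tue"
    hour: int       # local hour of day, 0-23
    seconds: float  # how long the action lasted

def time_bucket(hour: int) -> str:
    # Coarse time-of-day buckets keep the vocabulary small.
    if hour >= 22 or hour < 5:
        return "late_night"
    if hour >= 17:
        return "evening"
    return "daytime"

def duration_bucket(seconds: float) -> str:
    # Discretize continuous durations so similar moments share a token.
    if seconds < 2:
        return "short"
    if seconds < 30:
        return "medium"
    return "long"

def tokenize(event: Event) -> str:
    """Map one event to a discrete token string: the 'word' in the sentence."""
    return "|".join([event.action, event.weekday,
                     time_bucket(event.hour), duration_bucket(event.seconds)])

# The Tuesday-night session from the text (durations in seconds).
session = [
    Event("open_app", "Tue", 23, 0.0),
    Event("browse", "Tue", 23, 23.0),
    Event("hover", "Tue", 23, 1.8),
    Event("play", "Tue", 23, 0.0),
]
tokens = [tokenize(e) for e in session]
print(tokens[2])  # hover|Tue|late_night|short
```

Bucketing continuous values such as hover time keeps the vocabulary small, so similar moments from different evenings map to the same token.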

Each action is a word. Your evening is a sentence. Your viewing history over months is a paragraph, a chapter, a book about who you are and what you want.

And their Foundation Model has learned to predict the next word in your story.
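The production model is a transformer, but the objective itself, predicting the next token from what came before, can be illustrated with a far simpler stand-in: count which action tends to follow which, then predict the most frequent successor. The action names and histories below are invented toy data.

```python
from collections import Counter, defaultdict

def fit_bigrams(histories):
    """Count how often each action is immediately followed by each other action."""
    follows = defaultdict(Counter)
    for seq in histories:
        for prev, nxt in zip(seq, seq[1:]):
            follows[prev][nxt] += 1
    return follows

def predict_next(follows, last_action):
    """Return the most frequent successor of last_action, or None if never seen."""
    if last_action not in follows:
        return None
    return follows[last_action].most_common(1)[0][0]

histories = [
    ["open_app", "browse", "hover", "play"],
    ["open_app", "browse", "hover", "play"],
    ["open_app", "browse", "hover", "browse"],
    ["open_app", "continue_watching", "play"],
]
model = fit_bigrams(histories)
print(predict_next(model, "hover"))  # play
```

A transformer replaces the single previous action with the whole history and the counts with learned parameters, but it is trained toward the same kind of prediction.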

The Scale: Bigger Than You Think

Netflix has over 300 million subscribers. Each one generates thousands of these behavioral tokens every month—clicks, pauses, scrolls, plays, stops, rewinds, skips. The volume of data Netflix processes isn’t just comparable to the text used to train ChatGPT.

It’s arguably richer.

They process billions of events per week. Every single one is a token in the massive language model of human entertainment preferences they’re building.

But here’s what makes it powerful: they’re not just collecting data. They’re using the same transformer architecture that powers GPT, built from stacked self-attention layers and trained to predict the next token, and they extend it with multi-token prediction, all adapted for understanding you.

What Happened in Those Milliseconds

When you opened Netflix at 11:47 PM, here’s a simplified picture of what their Foundation Model did:

Step 1: Context Building

The model pulled up your entire interaction history — potentially hundreds or thousands of past tokens. Not just what you watched, but when you watched it, how long you stayed, what you skipped, what you rewatched.

Step 2: Pattern Recognition Through Attention

Using transformer attention mechanisms (the same technology that makes ChatGPT work), the model identified which parts of your history were most relevant right now. It noticed you tend to watch character-driven comedies on Tuesday evenings when you’re browsing slowly. It saw the pattern even if you didn’t.
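Step 2 can be illustrated with a single attention head in plain Python: a query vector standing for “right now” is compared against a key vector for each past event, the scores are softmax-normalized into weights, and the output is a weighted mix of the events’ value vectors. The three-dimensional vectors are made up, and reusing the keys as values is a shortcut for the toy; real models learn separate projections and stack many heads and layers.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query over a history of events."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output: attention-weighted average of the value vectors.
    out = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, out

# Toy history: [Tuesday-night comedy, weekend thriller, Tuesday-night sitcom]
keys = [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0], [0.9, 0.1, 1.0]]
values = keys  # toy shortcut: reuse keys as values
query = [1.0, 0.0, 1.0]  # "Tuesday night, browsing slowly"
weights, context = attend(query, keys, values)
```

Here the weekend thriller gets the smallest weight because its key points in a different direction from the Tuesday-night query.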

Step 3: Multi-Token Prediction

Here’s where it gets impressive. The model didn’t just predict you’d watch “The Good Place.” It predicted you’d watch three episodes, take a break around midnight, and probably come back tomorrow evening. It predicted the next several tokens in your story.

Step 4: The Recommendation

All of this happened in milliseconds. Before you even finished opening the app, Netflix had read your story and predicted the next chapter.

And they were right.
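The recommendation step is often framed as retrieval: score every candidate title against a vector summarizing the member’s current context and keep the best few. The sketch below assumes those vectors already exist; the titles are real shows, but the numbers and the `top_k` helper are invented for illustration.

```python
import heapq

def top_k(user_vec, catalog, k=2):
    """Rank titles by dot product with the user vector and keep the best k."""
    def score(item):
        _, vec = item
        return sum(u * v for u, v in zip(user_vec, vec))
    best = heapq.nlargest(k, catalog.items(), key=score)
    return [title for title, _ in best]

# Hypothetical title embeddings (3 dimensions for readability).
catalog = {
    "The Good Place": [0.9, 0.1, 0.8],
    "Dark": [0.1, 0.9, 0.2],
    "Brooklyn Nine-Nine": [0.8, 0.2, 0.6],
    "Our Planet": [0.2, 0.3, 0.1],
}
user_vec = [1.0, 0.0, 1.0]
print(top_k(user_vec, catalog))  # ['The Good Place', 'Brooklyn Nine-Nine']
```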

The 60-Second Window: Why This Matters So Much

Netflix knows something most people don’t: you’re about to leave.

Their research shows you have 60 to 90 seconds to find something to watch. That’s it. If you haven’t found something that grabs you within that window, you’re opening YouTube, or scrolling Instagram, or just turning off the TV.

During those 60-90 seconds, you’ll look at maybe 10-20 titles. You’ll actually consider 3 or 4 of them seriously. You’ll scroll through 1-2 screens. And then you’ll either start watching something, or you’ll leave.

This is why the Foundation Model matters. They don’t have time to show you random suggestions. They don’t have time to let you browse aimlessly. They have 60-90 seconds to read your story and predict the perfect next chapter.

The stakes are real. Netflix executives have stated that their recommendation engine saves the company about $1 billion per year by preventing customer churn. That was back in 2016, when they had 93 million subscribers. Today, with 300+ million subscribers, the real number is likely much higher.

They built this system because they had to. Every failed recommendation is a potential canceled subscription.

The Details: Inside the Tokenization

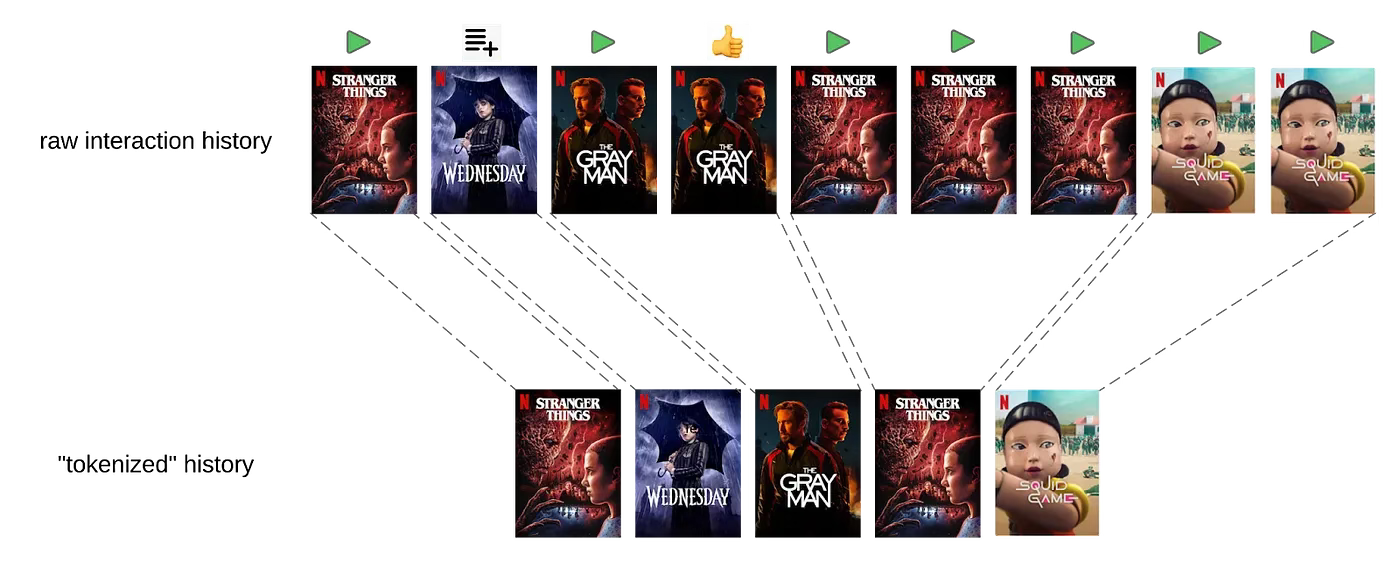

Netflix’s “interaction tokenization” isn’t just a metaphor. It’s their actual technical approach, inspired directly by how language models break down text.

Just like language models use Byte Pair Encoding (BPE) to merge common character sequences into tokens, Netflix merges adjacent user actions into higher-level behavioral tokens. They don’t treat every click as equally important. They identify meaningful sequences and compress redundant information.

For example:

- Dozens of rapid scrolls might become one token: “Fast browsing mode”

- Hovering plus clicking plus watching 30 seconds might become: “Engaged with content”

- Opening app on Tuesday evening might trigger: “Weekly wind-down pattern”

The system learns what constitutes a meaningful token by watching billions of user journeys and finding the patterns that actually predict future behavior.
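The merging idea mirrors the core loop of BPE: find the most frequent adjacent pair of tokens across all sequences, replace it everywhere with one combined token, and repeat. Below is a minimal sketch of that loop applied to action sequences; the action names and the `+` naming for merged tokens are illustrative, and this is not Netflix’s code.

```python
from collections import Counter

def most_frequent_pair(sequences):
    """Count adjacent token pairs across all sequences; return the commonest."""
    pairs = Counter()
    for seq in sequences:
        pairs.update(zip(seq, seq[1:]))
    return pairs.most_common(1)[0][0] if pairs else None

def merge_pair(seq, pair):
    """Replace every non-overlapping occurrence of pair with one merged token."""
    merged, i = [], 0
    while i < len(seq):
        if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
            merged.append(seq[i] + "+" + seq[i + 1])
            i += 2
        else:
            merged.append(seq[i])
            i += 1
    return merged

def learn_merges(sequences, num_merges):
    """Greedily learn up to num_merges merges, BPE-style."""
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(sequences)
        if pair is None:
            break
        merges.append(pair)
        sequences = [merge_pair(s, pair) for s in sequences]
    return merges, sequences

sessions = [
    ["scroll", "scroll", "hover", "play"],
    ["scroll", "hover", "play"],
    ["hover", "play", "pause"],
]
merges, compressed = learn_merges(sessions, num_merges=2)
```

On this toy data the first merge turns `hover` followed by `play` into a single `hover+play` token, roughly the “Engaged with content” idea from the list above.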

The Cold Start Problem: Predicting What Nobody Has Seen

Here’s where the Foundation Model becomes genuinely impressive: it can predict how much you’ll like content that nobody has watched yet.

When Netflix is about to launch a new show, nobody has watched it. Traditional recommendation systems break down—they need viewing data to make predictions. But the Foundation Model uses a hybrid approach, combining learnable title ID embeddings with metadata embeddings (genre, cast, director, themes) weighted by an attention mechanism.

For a brand new show with zero views, the model initializes its understanding by looking at similar content and adding slight random variations. As the first users start watching, the model updates in real-time, refining its predictions with each new data point.

This means Netflix can predict your reaction to their latest release before anyone in the world has watched it. They’re predicting the future based on understanding patterns in the past.
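A minimal sketch of that initialization, assuming each existing title has an embedding and a set of metadata tags: average the embeddings of titles that share at least one tag with the new one, then add slight random noise. The tag-overlap rule, the noise scale, and the fallback to the catalog-wide average are hypothetical choices.

```python
import random

def init_new_title(new_tags, catalog, noise=0.01, seed=None):
    """Initialize a new title's embedding from similar existing titles.

    catalog maps title -> (set_of_tags, embedding).
    "Similar" means sharing at least one tag (a hypothetical rule).
    """
    rng = random.Random(seed)
    similar = [emb for tags, emb in catalog.values() if tags & new_tags]
    if not similar:
        # No tag overlap: fall back to the average of the whole catalog.
        similar = [emb for _, emb in catalog.values()]
    dim = len(similar[0])
    mean = [sum(e[i] for e in similar) / len(similar) for i in range(dim)]
    # Slight random variation around the average.
    return [m + rng.gauss(0.0, noise) for m in mean]

catalog = {
    "The Good Place": ({"comedy", "afterlife"}, [0.8, 0.2]),
    "Schitt's Creek": ({"comedy", "family"}, [0.6, 0.4]),
    "Dark": ({"thriller", "sci-fi"}, [0.1, 0.9]),
}
emb = init_new_title({"comedy"}, catalog, seed=0)
```

Once real viewing data arrives, training moves the embedding away from this starting point.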

The Art of the Thumbnail: How They Hook You Visually

Netflix ran eye-tracking studies. They brought people into labs, strapped sensors to their heads, and watched exactly how humans browse for entertainment.

The findings were precise: artwork isn’t just important to your decision—it constitutes over 82% of your focus when browsing. The image is the decision.

So Netflix optimized. They run A/B tests on thumbnails at massive scale. They’ve learned that faces with complex emotional expressions outperform stoic poses. That images with 3+ people show decreased engagement. That the right artwork can produce 20-30% increases in viewings.

And here’s the fascinating part: they personalize the artwork. The thumbnail you see for “The Good Place” might be different from mine. They’re not just predicting what you’ll watch—they’re predicting which image will make you click.

It’s recommendation systems all the way down.
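Choosing which artwork to show while still learning which one works is often framed as a multi-armed bandit problem. The sketch below uses Beta-Bernoulli Thompson sampling over three artwork variants for one title. It is a simplification: Netflix has described using contextual bandits for artwork personalization, which also take the member into account, while this version ignores context. The variant names and click-through rates are invented.

```python
import random

def choose_artwork(stats, rng):
    """Thompson sampling: draw a plausible click rate per variant, pick the max.

    stats maps variant -> [clicks, impressions].
    """
    def sample(variant):
        clicks, shows = stats[variant]
        # Beta(1 + clicks, 1 + misses): posterior under a uniform Beta(1, 1) prior.
        return rng.betavariate(1 + clicks, 1 + shows - clicks)
    return max(stats, key=sample)

def simulate(true_ctr, rounds=5000, seed=42):
    rng = random.Random(seed)
    stats = {v: [0, 0] for v in true_ctr}
    for _ in range(rounds):
        v = choose_artwork(stats, rng)
        stats[v][1] += 1
        if rng.random() < true_ctr[v]:  # simulated member clicks or not
            stats[v][0] += 1
    return stats

# Invented click-through rates for three hypothetical thumbnails.
true_ctr = {"close_up_face": 0.12, "group_shot": 0.05, "logo_only": 0.03}
stats = simulate(true_ctr)
```

Over time the sampler shows the best-performing image most often while still occasionally testing the others.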

Why This Isn’t Just Netflix

If you think Netflix is alone in this, you haven’t been paying attention.

Spotify adopted Meta’s Llama 3.1 in December 2024 for contextualized music recommendations. They’re using large language models to generate AI DJ commentary and recommendation explanations, reporting 4× higher click-through rates for niche content. They’re actively hiring “Generative Recommenders” engineers with transformer expertise.

YouTube deployed transformers for music recommendations in August 2024. Their models process user action sequences of hundreds to thousands of events, using self-attention to understand context—like gym versus home listening patterns. Skip rates went down. Session length went up.

Amazon uses History-Aware Transformers for personalized outfit recommendations and transformer-based substitute recommendations across 11 marketplaces. In September 2024, they integrated foundation models for personalized product descriptions with evaluator LLMs for quality control.

This is the pattern: every major recommendation platform is converging on the same insight. Transformers. Attention mechanisms. Foundation models. The technology that made ChatGPT possible is being adapted to predict human behavior across every platform you use.

The Technical Reality: How Close Is This to Mind Reading?

Let’s be precise about what Netflix actually built.

Architecture: A transformer with multi-head self-attention, similar to GPT. The model uses sparse attention techniques such as low-rank compression to extend its context window to several hundred events.

Training objective: Autoregressive next-token prediction, exactly like language models. Their blog post literally states: “Our default approach employs the autoregressive next-token prediction objective, similar to GPT.”

Multi-token prediction: The model predicts the next “n” tokens at each step instead of a single token, helping it understand longer-term dependencies. This approach was inspired by a 2024 paper on improving language models.
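Both training objectives come down to how targets are built from one token sequence. In the GPT-style setup every prefix predicts the token right after it; with multi-token prediction each position is trained on the next n tokens instead; and a causal mask keeps attention from peeking ahead. A minimal sketch in plain Python with toy tokens; the padding token and the choice n = 3 are assumptions for the example.

```python
PAD = "<pad>"

def next_token_targets(tokens):
    """GPT-style objective: each prefix is trained to predict the token after it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def multi_token_targets(tokens, n):
    """Multi-token objective: position t is trained on tokens t+1 .. t+n."""
    targets = []
    for t in range(len(tokens)):
        window = tokens[t + 1 : t + 1 + n]
        targets.append(window + [PAD] * (n - len(window)))  # pad near the end
    return targets

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j, i.e. j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]

seq = ["open_app", "browse", "hover", "play", "pause"]
pairs = next_token_targets(seq)
targets = multi_token_targets(seq, n=3)
mask = causal_mask(len(seq))
```

Setting n = 1 in `multi_token_targets` recovers the single next-token objective.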

Embeddings: Learnable item ID embeddings combined with learnable metadata embeddings. An attention mechanism weights their importance based on entity “age”—new content relies more on metadata, established content relies more on viewing patterns.
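A simplified version of that weighting: blend a title’s ID embedding with its metadata embedding using a weight that grows with the title’s age, so brand-new titles lean on metadata and established ones lean on learned viewing patterns. The text above describes an attention mechanism for this; the fixed logistic gate below, with its hypothetical `midpoint` and `scale` parameters, is a stand-in for it.

```python
import math

def blend_embeddings(id_emb, meta_emb, age_days, midpoint=30.0, scale=7.0):
    """Mix ID and metadata embeddings; the weight on the ID side rises with age.

    midpoint and scale are hypothetical: at age == midpoint both count equally.
    """
    w_id = 1.0 / (1.0 + math.exp(-(age_days - midpoint) / scale))
    return [w_id * a + (1.0 - w_id) * b for a, b in zip(id_emb, meta_emb)]

id_emb = [1.0, 0.0]    # learned from viewing (unreliable for new titles)
meta_emb = [0.0, 1.0]  # built from genre, cast, tone
new_title = blend_embeddings(id_emb, meta_emb, age_days=0)
old_title = blend_embeddings(id_emb, meta_emb, age_days=365)
```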

Incremental training: The system warm-starts new models by reusing parameters from previous models, maintaining consistency while continuously improving.

This isn’t marketing language. This is their actual architecture, published on their official tech blog in March 2025.
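Incremental training of this kind can be sketched as copying the previous model’s learned embeddings for every title the two models share and initializing only the titles that are new. The dictionary-based “model”, the `warm_start` helper, and the `init_fn` hook are hypothetical; a real model would also reuse its other learned parameters.

```python
def warm_start(prev_embeddings, new_vocab, init_fn):
    """Reuse embeddings for known titles; initialize only unseen ones."""
    embeddings = {}
    reused = 0
    for title in new_vocab:
        if title in prev_embeddings:
            embeddings[title] = list(prev_embeddings[title])  # copy, don't alias
            reused += 1
        else:
            embeddings[title] = init_fn(title)
    return embeddings, reused

prev = {"The Good Place": [0.8, 0.2], "Dark": [0.1, 0.9]}
vocab = ["The Good Place", "Dark", "Brand New Show"]
emb, reused = warm_start(prev, vocab, init_fn=lambda title: [0.0, 0.0])
```

Starting from the previous weights means the new model does not have to relearn what it already knew, which is what keeps successive versions consistent.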

The Evolution: From Predicting Actions to Predicting Intent

Four months after launching their Foundation Model, Netflix made it even more sophisticated. In July 2025, they shared FM-Intent, and this is where things get genuinely interesting.

The Foundation Model could predict what you’d watch next. FM-Intent predicts why you’re opening Netflix in the first place.

Here’s the difference: when you opened the app last Tuesday at 11:47 PM, the Foundation Model saw your behavioral tokens and predicted you’d watch “The Good Place.” But FM-Intent went deeper. Before predicting what you’d watch, it predicted your intent for that session:

Action Type: Are you here to discover something new, or continue what you started?

Genre Preference: Which genre are you craving right now—comedy, thriller, drama?

Format Intent: Are you in a movie mood (one longer experience) or a TV show mood (multiple shorter episodes)?

Recency Preference: Do you want newly released content, recent additions, or classic catalog titles?

The model doesn’t just guess these. It predicts them using what Netflix calls “hierarchical multi-task learning” — it predicts your intent first, then uses that predicted intent to recommend specific content. It’s a two-step process that happens in the same milliseconds.

And it’s more accurate because of it.
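The intent-first ordering can be sketched in two stages: predict an intent distribution for the session, then score titles with that prediction as an input. Both stages below are hand-written stand-ins for learned layers, and the intent labels, title attributes, and scoring rule are hypothetical; only the ordering, intent first and item second, follows the description above.

```python
def predict_intent(session_features):
    """Stage 1 (stand-in): probability the member wants to discover vs. continue."""
    # Hypothetical rule: slow browsing suggests discovery.
    p_discover = 0.8 if session_features["browse_seconds"] > 15 else 0.3
    return {"discover": p_discover, "continue": 1.0 - p_discover}

def score_titles(titles, intent):
    """Stage 2: rank titles using scores conditioned on the predicted intent."""
    scores = {}
    for name, attrs in titles.items():
        # Weight each title by how likely its matching intent is.
        match = intent["continue"] if attrs["in_progress"] else intent["discover"]
        scores[name] = attrs["base_score"] * match
    return sorted(scores, key=scores.get, reverse=True)

titles = {
    "The Good Place": {"in_progress": False, "base_score": 0.7},
    "Half-finished drama": {"in_progress": True, "base_score": 0.9},
}
slow = score_titles(titles, predict_intent({"browse_seconds": 23}))
fast = score_titles(titles, predict_intent({"browse_seconds": 3}))
```

The same two titles come back in a different order depending on the predicted intent, which is the effect the hierarchy is meant to capture.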

The Breakthrough

In their offline experiments, FM-Intent showed a 7.4% improvement in next-item prediction accuracy compared to their previous best model. That might sound small, but at Netflix’s scale — 300+ million users, billions of interactions — 7.4% is massive.

But the really fascinating part isn’t the accuracy improvement. It’s what FM-Intent reveals about you.

Your Intent Cluster: Netflix Knows Your Viewing DNA

FM-Intent generates something called “intent embeddings”: mathematical representations of why you watch what you watch. When Netflix ran clustering analysis on these embeddings, they found that users grouped into distinct intent patterns.

Some clusters they found:

- Discoverers vs. Continuers: Users who primarily hunt for new content versus those who stick with favorites and return to recent watches

- Genre Enthusiasts: Anime devotees, kids content viewers, documentary fans—people with strong genre preferences

- Viewing Pattern Types: Binge-watchers versus casual viewers, rewatchers versus one-time watchers

You’re in one of these clusters right now. Netflix knows which one.
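The source does not say which clustering method Netflix used; k-means is a common choice for embeddings and is assumed here. It alternates between assigning each embedding to its nearest centroid and moving each centroid to the mean of its members. The two-dimensional “intent embeddings” are invented, with one axis loosely standing for discovering and the other for continuing.

```python
import math

def kmeans(points, centroids, iters=10):
    """Plain k-means with caller-supplied starting centroids (deterministic)."""
    for _ in range(iters):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Move each centroid to its members' mean; keep it if the cluster is empty.
        centroids = [
            [sum(xs) / len(cl) for xs in zip(*cl)] if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
    return centroids, clusters

# Invented intent embeddings: x ~ "discover" tendency, y ~ "continue" tendency.
users = [[0.9, 0.1], [0.8, 0.2], [0.85, 0.15], [0.1, 0.9], [0.2, 0.8]]
centroids, clusters = kmeans(users, centroids=[[1.0, 0.0], [0.0, 1.0]])
```

Here the first three users land in a “Discoverers” cluster and the last two in a “Continuers” cluster.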

What They Do With Your Intent

FM-Intent isn’t just about better predictions. It’s being used across Netflix’s entire system:

Personalized UI Optimization: The layout you see on your homepage is influenced by your predicted intent. If the model predicts you’re in “discovery mode,” you see different rows than if you’re in “continue watching” mode.

Real-Time Search: When you search for something, your predicted session intent influences which results appear first. Same search query, different results, based on what Netflix thinks you’re trying to accomplish right now.

Analytics and Content Decisions: Your intent clusters inform what content Netflix should acquire or produce. If millions of users are clustering into “newly released thriller” intent patterns, that’s a signal.

Enhanced Recommendations: Your predicted intent becomes a feature for other recommendation models, making the entire system smarter.

The system doesn’t just predict what you’ll watch. It predicts why you’re here, then uses that to show you exactly what you need.

What This Means: The Uncomfortable Question

Here’s the thing that keeps me up at night: if Netflix’s Foundation Model can predict your next choice with this level of accuracy, and FM-Intent can predict your underlying intent before you’ve consciously recognized it yourself, who’s really making the decision?

You opened the app wanting something light and nostalgic. FM-Intent predicted you were in “continue watching” mode with a preference for character-driven comedy. Netflix showed you “The Good Place.” You clicked.

But here’s the question: did you choose it, or did Netflix’s models predict your choice and intent so accurately that they removed the other options from consideration?

The Foundation Model predicts entire sequences of future behavior. FM-Intent predicts the psychological state driving those behaviors. Together, they’re not reading your mind—they’re predicting your actions and motivations before you’ve consciously decided on them.

That’s not the same thing as mind reading. But I’m not sure it’s entirely different, either.

The Future Is Already Processing Your Intent

Netflix’s Foundation Model and FM-Intent are running right now. At data centers around the world, they’re processing hundreds of billions of behavioral tokens from 300 million users. They’re learning patterns, predicting sequences, understanding intent, optimizing recommendations.

And they’re getting better every day.

The next time Netflix shows you the perfect recommendation at exactly the right moment, remember: they didn’t guess. They built a language model for human behavior, trained it on 1.8 trillion annual interactions, taught it to understand your intent, and used it to predict your story before you’ve finished writing it.

They treated you like a sentence. They predicted your intent before you recognized it yourself. And they predicted the next word.

That’s not mind reading. But watching it work feels pretty damn close.

Want to see it in action?

Open Netflix right now. Look at what they’re recommending. Ask yourself: how did they know? Then remember: FM-Intent has just predicted why you opened the app, and the Foundation Model has processed your past interactions, cross-referenced them with patterns from 300 million other users, and predicted what you need right now.

Welcome to the LLM future. It’s been watching you — and understanding you — the whole time.

Sources

- Netflix Technology Blog: “Foundation Model for Personalized Recommendation” (March 29, 2025)